Exploring Other Neural Network Architectures

1. Recurrent Neural Networks Part 1

Traditional neural networks work well for many tasks, but they are a poor fit for some kinds of data. Sequential data is a good example (hint: this is where recurrent neural networks come in)!

So, what is sequential data?

Sequential data is data in which the order of the elements carries meaning. Each element occupies a specific position, and the relationships between elements are defined by that ordering, so rearranging the elements changes the information they convey.

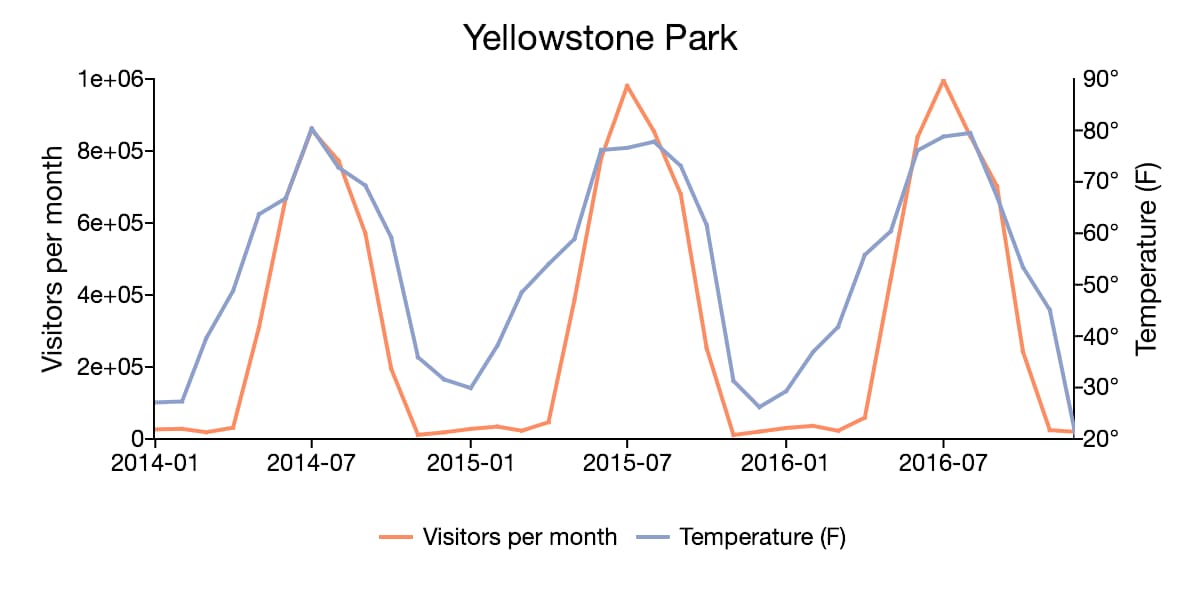

Time series data is a type of sequential data: data collected over successive time intervals. Examples include stock prices recorded over a period of time, temperature measurements over time, or the number of visitors to Yellowstone over a few years.

Why are traditional neural networks ineffective here?

Traditional neural networks, also called feedforward neural networks, process data in a straightforward manner, treating each input as an independent entity. This works well for static data, but it is less effective for sequences, where the order and context of the information matter.

A good example is processing a sentence: the arrangement of the words holds meaning, and that arrangement is an important feature a traditional neural network has no built-in way to track.
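To make the sentence example concrete, here is a minimal sketch. It assumes the sentence is handed to the network as an unordered bag of word counts, which is one common way to turn text into a fixed-size input; the helper name `bag_of_words` and the two sentences are made up for illustration.

```python
from collections import Counter

def bag_of_words(sentence):
    """Count how often each word appears, ignoring word order (illustrative helper)."""
    return Counter(sentence.lower().split())

a = "dog bites man"
b = "man bites dog"

# The two sentences mean different things, and their word sequences differ...
print(a.split() == b.split())                # False

# ...but once order is discarded, the inputs are indistinguishable.
print(bag_of_words(a) == bag_of_words(b))    # True
```

Under this assumption, any model that only sees the word counts must produce the same output for both sentences, no matter how it is trained.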

This limitation leads us to the idea of Recurrent Neural Networks (RNNs).

Designed to address the shortcomings of traditional neural networks, RNNs introduce the concept of memory into neural network architectures. This memory lets an RNN “remember” information from earlier elements in a sequence and use it as context when processing later ones.
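As a rough preview of what "memory" can look like, the sketch below keeps a single number `h` (a hidden state) and updates it at every step from the current input and its own previous value. This is a deliberately tiny, scalar toy under stated assumptions: the function name `run_rnn`, the weights `w_x` and `w_h`, and the use of `tanh` are illustrative choices, and the weights are fixed by hand rather than learned.

```python
import math

def run_rnn(sequence, w_x=0.5, w_h=0.8):
    """Run a toy scalar recurrence over a sequence and return the final hidden state.

    w_x scales the current input and w_h scales the previous hidden state;
    both values are arbitrary and chosen only for illustration.
    """
    h = 0.0  # the "memory" starts out empty
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h)
    return h

forward = run_rnn([1.0, 2.0, 3.0])
backward = run_rnn([3.0, 2.0, 1.0])

# Same elements, different order, different final state.
print(forward, backward)
print(forward != backward)  # True
```

Because each update feeds the previous state back in, the final value reflects the order in which the inputs arrived, not just which inputs were present.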

Thus, unlike feedforward neural networks, RNNs can capture the relationships between elements in a sequence, which makes them particularly effective for sequential data such as time series, speech, and natural language.

Throughout this section, we’ll explore how RNNs do this!